Data Protection in the Age of AI

Understand the risks, the evolving regulations, and the safeguards to protect your data. See how responsible data practices unlock the benefits of AI without compromising privacy.

Modern artificial-intelligence tools are transforming how organisations use data. AI systems, from simple machine-learning pipelines to complex large-language models (LLMs), constantly ingest, analyse and learn from massive volumes of personal and behavioural information. At the same time, this deep integration of AI magnifies privacy risks: what was once passive data storage becomes active and automated decision-making. The key question is no longer whether AI will influence your data, but how you can protect that data in a world where AI touches everything. In this article, we explore the risks AI introduces, how regulation is adapting, what this means for you, and how organisations can protect customer data in a responsible and transparent way.

Risks

AI changes how data is used, shared and understood, and this creates new risks that many organisations are not prepared for. AI systems often collect more data than they need, which increases exposure to mistakes, leaks or misuse. Some systems even pull in sensitive personal information, such as health, financial or biometric data, that was never meant to be combined or fed into an AI model.

AI tools may use data without clear consent: people may not fully understand that their information is used to train models or influence decisions. Data flows also become hard to follow, which makes it difficult to prove where personal information came from, how it moves through the system or why a model produced a certain output. Many AI models act like black boxes, so it is hard to explain how they reach their outcomes. This opacity makes bias and unfair decisions harder to detect and correct, which can cause real harm to individuals.

AI also increases the risk of data leakage, model leakage, or data exfiltration. Sensitive data might escape through unintended outputs, logging, or insecure storage. AI systems often mix data from different sources. This increases the chance of privacy violations, because disparate bits of data, when combined, may reveal more than originally intended. These risks grow as AI becomes faster, cheaper and easier to use. The scale and speed at which AI can operate make it harder to track, audit, and control data. Understanding these risks is the first step toward building safe and responsible AI systems.

Regulatory Landscape

To combat these risks, regulation is moving fast. In the European Union, where we are based and whose rules we are subject to, the General Data Protection Regulation (GDPR) remains the main rulebook when AI systems process personal data. GDPR does not name “AI” explicitly, but it defines principles such as lawful processing, consent, purpose limitation, data minimisation, transparency and individuals’ rights, which apply no matter what technology is used. In 2024 the EU Artificial Intelligence Act (AI Act) became law. It adds rules specific to AI: it identifies “high-risk” AI systems, for example in recruitment, healthcare or credit scoring, and requires stricter oversight, documentation, transparency and human-in-the-loop safeguards when such systems are used.

When an AI system handles personal data, both GDPR and the AI Act apply. The overlap means organisations must comply with data-protection rules as well as AI-specific rules. This dual burden can be challenging, but it also brings clarity: data subjects keep their rights, and companies must build safe, transparent AI systems. At the same time, regulators are paying more attention. For instance, supervisory authorities increasingly require rigorous documentation of data flows, decision logic and risk assessments when AI systems are used.

Because of this, companies cannot treat AI as a purely technical feature. They need proper governance. They must embed data-protection and AI-compliance practices into design, build and deployment. This makes good data hygiene and transparent data governance core to any AI strategy today.

What this means for you

First and foremost, you have a right to clear and honest information before handing over any personal data. Before first use, you need to know which data the vendor collects, why it is collected, and how it will be used. You should also be told how your data moves through their systems. That includes whether identifiers are masked or removed, how data is stored, who can access it, and how long it will be kept. You must be able to access the data the vendor holds about you. If something is incorrect, you need a way to correct it. If you no longer want the vendor to keep your data, you must be able to request deletion or ask for processing to be restricted. These rights are guaranteed by the GDPR.

If the AI system uses profiling, scoring, or automated decision-making that affects you, you deserve meaningful human review or oversight. You also deserve a clear explanation of how decisions are made and a chance to challenge them if needed. Your data should be protected with strong technical and organisational safeguards. That means secure storage, encryption, short retention periods, and collecting only what is strictly necessary. Data minimisation, pseudonymisation, and purpose limitation must be part of the vendor’s design choices.
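To make data minimisation and pseudonymisation more concrete, here is a minimal Python sketch. It assumes a hypothetical record layout, a hypothetical allow-list of fields (`ALLOWED_FIELDS`) and a placeholder secret key; it illustrates the technique and does not describe any particular vendor's system. Fields outside the allow-list are dropped, and the direct identifier is replaced with a keyed HMAC-SHA256 token, so records can still be linked without exposing the original value. Note that under GDPR, pseudonymised data is still personal data, because it can be linked back to a person with additional information (here, the key).

```python
import hashlib
import hmac

# Hypothetical secret key for illustration only. In practice it would live in a
# key-management system, stored separately from the pseudonymised data.
PSEUDONYMISATION_KEY = b"replace-with-a-secret-key"

# Hypothetical allow-list: the only fields this processing purpose needs.
ALLOWED_FIELDS = {"customer_id", "country", "plan"}


def pseudonymise(identifier: str, key: bytes = PSEUDONYMISATION_KEY) -> str:
    """Replace a direct identifier with a keyed HMAC-SHA256 token.

    The same input and key always give the same token, so records can still be
    joined. The original value cannot be derived from the token, and without
    the key the token cannot be recomputed from a guessed identifier.
    """
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def minimise(record: dict) -> dict:
    """Keep only allow-listed fields and pseudonymise the customer identifier."""
    kept = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    if "customer_id" in kept:
        kept["customer_id"] = pseudonymise(str(kept["customer_id"]))
    return kept


if __name__ == "__main__":
    raw = {
        "customer_id": "C-1042",
        "email": "jane@example.com",      # not needed for this purpose: dropped
        "date_of_birth": "1990-01-01",    # not needed for this purpose: dropped
        "country": "NL",
        "plan": "pro",
    }
    print(minimise(raw))
```

Calling `minimise` on this record returns only `customer_id` (now a 64-character hexadecimal token), `country` and `plan`. An allow-list is used rather than a block-list so that any new field added upstream is excluded by default.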

The vendor should also keep transparent documentation. You should be able to request and read documents that show how their AI works, what data it uses, and how they comply with data-protection and ethical standards. Finally, it should be easy to communicate with the vendor. There should be simple tools or processes to view your data, correct errors, request deletion, or opt out of certain processing.

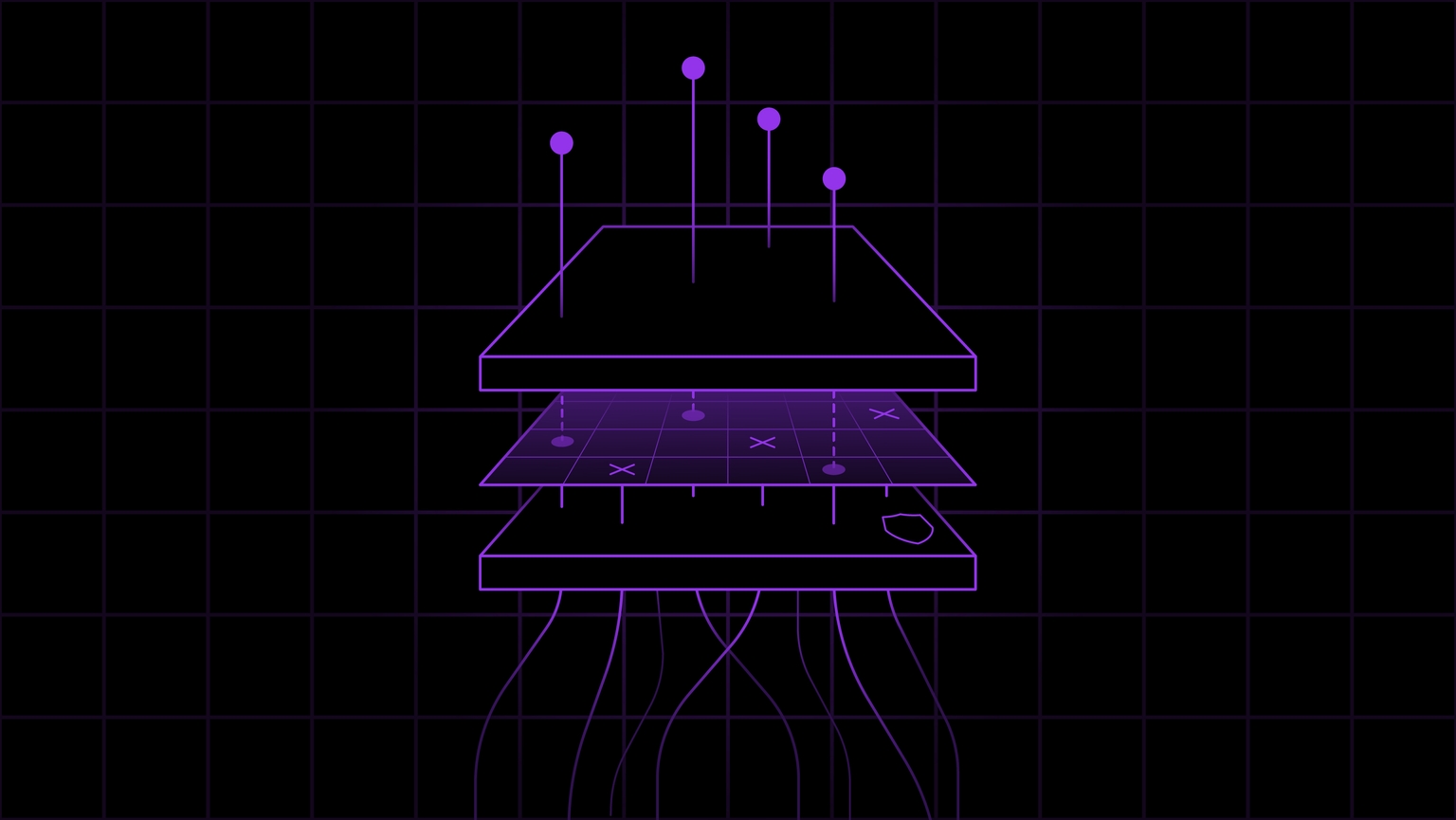

How We Protect Customer Data

We do not trust AI to keep data safe. As an agentic analytics company, we take this as our starting point. We believe that real security comes from the deterministic controls we build around the model, not from the model itself. Based on that principle, we apply the following safeguards:

- We remove or mask personal data before it reaches any AI component. Our anonymisation engine reduces exposure and prevents sensitive information from appearing in outputs or logs.

- We keep storage lean. We store only what we need to deliver the service. All data is encrypted. We delete information as soon as we can and never keep it longer than three years. A small footprint reduces the risk of misuse or breach.

- We never train or fine-tune models on customer data. Your interactions do not shape our or our partners’ models and never become part of future outputs. Each request stands alone.

- We give you a clear view of the full data lifecycle. You can see how your data moves through the system, how long it is retained, and which safeguards apply. You stay in control. You can view or delete your data at any time.

- We follow GDPR and established security frameworks. Standards such as SOC 2 and ISO 27001 guide how we design access controls, logging, leak prevention and auditing. Please note that we are not yet certified.
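The first two safeguards above, masking before any AI component and a fixed retention limit, are deterministic controls of the kind this section describes. The Python sketch below illustrates the idea under simple assumptions: the regular expressions (`EMAIL_RE`, `PHONE_RE`) and the function names are hypothetical, this is not our actual anonymisation engine, and real masking needs far broader coverage than two patterns (the phone pattern, for example, would also match some dates). The `MAX_RETENTION` value mirrors the three-year upper bound stated above.

```python
import re
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical, deliberately simple patterns. A production anonymisation engine
# would cover many more identifier types (names, addresses, IBANs, ...) and
# usually combines pattern matching with named-entity recognition.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s-]{7,}\d")

# Upper bound from the text: data is never kept longer than three years
# (approximated here as 3 * 365 days, ignoring leap days).
MAX_RETENTION = timedelta(days=3 * 365)


def mask_pii(text: str) -> str:
    """Replace e-mail addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)  # e-mails first, before digit matching
    return PHONE_RE.sub("[PHONE]", text)


def prepare_prompt(user_text: str) -> str:
    """Deterministic step that runs before any model call sees the text."""
    return mask_pii(user_text)


def is_expired(stored_at: datetime, now: Optional[datetime] = None) -> bool:
    """Return True if a record has exceeded the maximum retention period."""
    now = now or datetime.now(timezone.utc)
    return now - stored_at > MAX_RETENTION


if __name__ == "__main__":
    print(prepare_prompt("Call me on +31 6 1234 5678 or mail jane.doe@example.com"))
```

Running the example prints `Call me on [PHONE] or mail [EMAIL]`. Because masking and retention checks are plain code that runs outside the model, they can be tested and audited on their own, independently of how any model behaves.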

No system is perfect, and we do not claim otherwise. We review our safeguards regularly and improve them whenever we can. We believe that being transparent about our limits is part of earning trust, and trust is the foundation of responsible AI.

Want to be part of the next wave of AI-driven insights?

- Early access: Be among the first to experience our AI-powered analytics agents and get access before public launch.

- Founding-user benefits: Get private beta access, direct input into our roadmap, custom integrations, and other lifetime early-adopter perks.